In the mid-1800s a caterpillar the size of a human finger began spreading across the northeastern U.S. This appearance of the tomato hornworm was followed by terrifying reports of fatal poisonings and aggressive behavior toward people. In July 1869 newspapers across the region posted warnings about the insect, reporting that a girl in Red Creek, N.Y., had been “thrown into spasms, which ended in death” after a run-in with the creature. That fall the Syracuse Standard printed an account from one Dr. Fuller, who had collected a particularly enormous specimen. The physician warned that the caterpillar was “as poisonous as a rattlesnake” and said he knew of three deaths linked to its venom.

Although the hornworm is a voracious eater that can strip a tomato plant in a matter of days, it is, in fact, harmless to humans. Entomologists had known the insect to be innocuous for decades when Fuller published his dramatic account, and his claims were widely mocked by experts. So why did the rumors persist even though the truth was readily available? People are social learners. We develop most of our beliefs from the testimony of trusted others such as our teachers, parents and friends. This social transmission of knowledge is at the heart of culture and science. But as the tomato hornworm story shows us, this ability has a gaping vulnerability: sometimes the ideas we spread are wrong.

In recent years the ways in which the social transmission of knowledge can fail us have come into sharp focus. Misinformation shared on social media websites has fueled an epidemic of false belief, with widespread misconceptions concerning topics ranging from the COVID-19 pandemic to voter fraud, whether the Sandy Hook school shooting was staged and whether vaccines are safe. The same basic mechanisms that spread fear about the tomato hornworm have now intensified—and, in some cases, led to—a profound public mistrust of basic societal institutions. One consequence is the largest measles outbreak in a generation.

“Misinformation” may seem like a misnomer here. After all, many of today’s most damaging false beliefs are initially driven by acts of propaganda and disinformation, which are deliberately deceptive and intended to cause harm. But part of what makes disinformation so effective in an age of social media is the fact that people who are exposed to it share it widely among friends and peers who trust them, with no intention of misleading anyone. Social media transforms disinformation into misinformation.

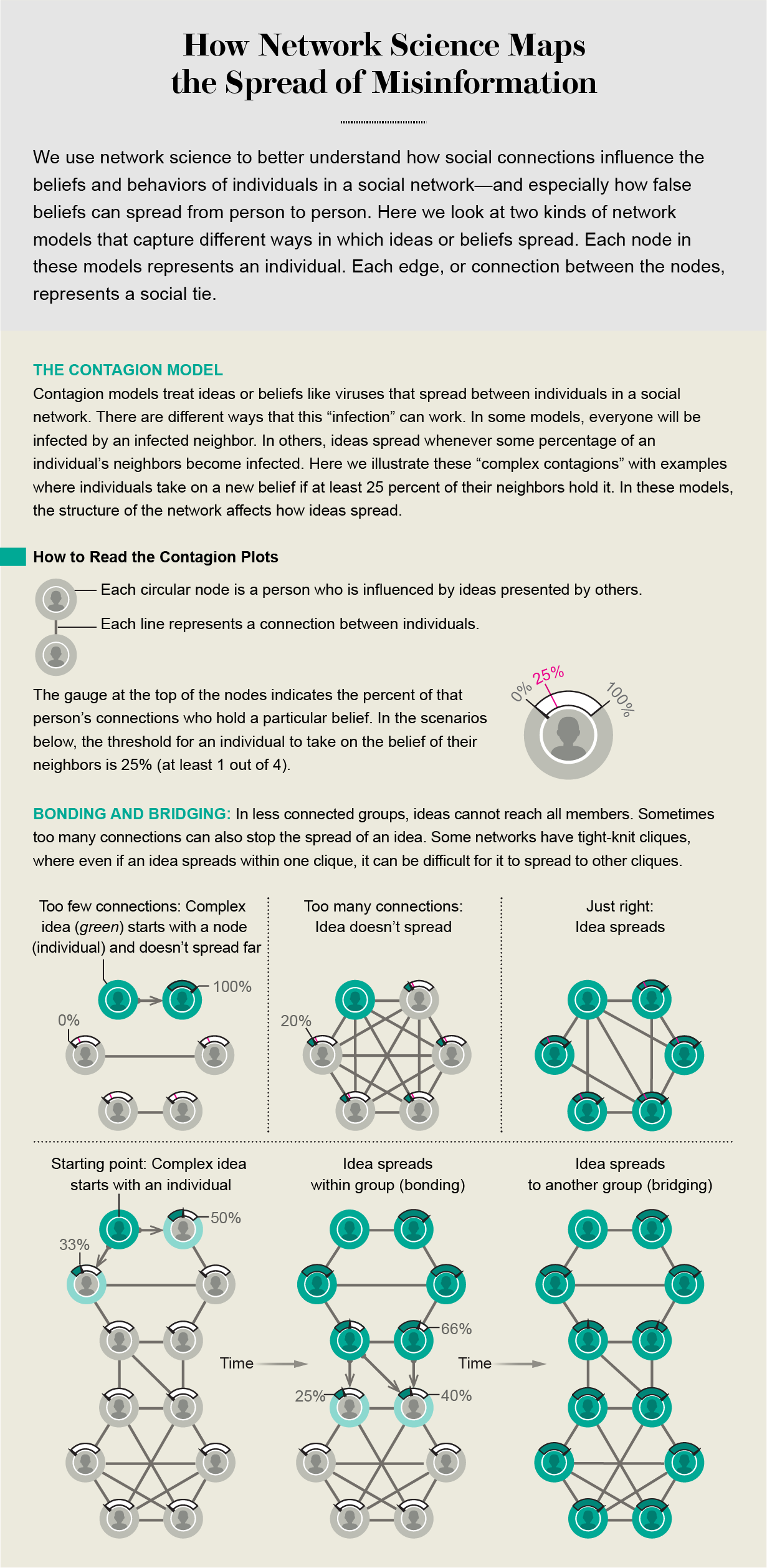

Many communication theorists and social scientists have tried to understand how false beliefs persist by modeling the spread of ideas as a contagion. Employing mathematical models involves simulating a simplified representation of human social interactions using a computer algorithm and then studying these simulations to learn something about the real world. In a contagion model, ideas are like viruses that go from mind to mind. You start with a network, which consists of nodes, representing individuals, and edges, which represent social connections. You seed an idea in one “mind” and see how it spreads under various assumptions about when transmission will occur.
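The contagion setup described above can be sketched in a few lines of Python. This is only a minimal illustration, not any particular published model: the function name `spread_idea`, the per-contact transmission probability `transmit_prob` and the six-person ring network are all assumptions made for the example.

```python
import random

def spread_idea(network, seed, transmit_prob, rounds, rng=None):
    """Simple contagion: each round, every believer passes the idea to each
    neighbor who does not yet hold it, independently with probability
    transmit_prob. Returns the set of believers after the last round."""
    rng = rng or random.Random(0)
    believers = {seed}
    for _ in range(rounds):
        newly_convinced = set()
        for person in believers:
            for neighbor in network[person]:
                if neighbor not in believers and rng.random() < transmit_prob:
                    newly_convinced.add(neighbor)
        believers |= newly_convinced
    return believers

# Hypothetical network: six people in a ring, each connected to two neighbors.
ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}

# With certain transmission, the idea reaches everyone within three rounds.
print(sorted(spread_idea(ring, seed=0, transmit_prob=1.0, rounds=3)))
# prints [0, 1, 2, 3, 4, 5]
```

Note that nothing in this sketch checks whether the idea is true. Transmission depends only on who is connected to whom, which is the feature that makes contagion models a natural fit for rumors.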

Contagion models are extremely simple but have been used to explain surprising patterns of behavior, such as the epidemic of suicide that reportedly swept through Europe after publication of Goethe’s The Sorrows of Young Werther in 1774 or the 1962 episode in which dozens of U.S. textile workers reported suffering from nausea and numbness after being bitten by an imaginary insect. They can also explain how some false beliefs propagate on the Internet. Before the 2016 U.S. presidential election, an image of a young Donald Trump appeared on Facebook. It included a quote, attributed to a 1998 interview in People magazine, saying that if Trump ever ran for president, it would be as a Republican because the party is made up of “the dumbest group of voters.” Although it is unclear who “patient zero” was, we know that this meme passed rapidly from profile to profile.

The meme’s veracity was quickly evaluated and debunked. As early as October 2015, the fact-checking website Snopes reported that the quote was fabricated. But as with the tomato hornworm, these efforts to disseminate truth did not change how the rumors spread. One copy of the meme alone was shared more than half a million times. As new individuals shared it over the next several years, their false beliefs infected friends who observed the meme, and they, in turn, passed the false belief on to new areas of the network.

This is why many widely shared memes seem to be immune to fact-checking and debunking. Each person who shared the Trump meme simply trusted the friend who had shared it rather than checking for themselves. Putting the facts out there does not help if no one bothers to look them up. It might seem like the problem here is laziness or gullibility—and thus that the solution is merely more education or better critical thinking skills. But that is not entirely right. Sometimes false beliefs persist and spread even in communities where everyone works very hard to learn the truth by gathering and sharing evidence. In these cases, the problem is not unthinking trust. It goes far deeper than that.

Trust the Evidence

Before it was shut down in November 2020, the “Stop Mandatory Vaccination” Facebook group had hundreds of thousands of followers. Its moderators regularly posted material that was framed to serve as evidence for the community that vaccines are harmful or ineffective, including news stories, scientific papers and interviews with prominent vaccine skeptics. On other Facebook group pages, thousands of concerned parents ask and answer questions about vaccine safety, often sharing scientific papers and legal advice supporting antivaccination efforts. Participants in these online communities care very much about whether vaccines are harmful and actively try to learn the truth. Yet they come to dangerously wrong conclusions. How does this happen?

The contagion model is inadequate for answering this question. Instead we need a model that can capture cases where people form beliefs on the basis of evidence that they gather and share. It must also capture why these individuals are motivated to seek the truth in the first place. When it comes to health topics, there might be serious costs to acting on false beliefs. If vaccines are safe and effective (which they are) and parents do not vaccinate, they put their kids and immunosuppressed people at unnecessary risk. If vaccines are not safe, as the participants in these Facebook groups have concluded, then the risks go the other way. This means that figuring out what is true, and acting accordingly, matters deeply.

To better understand this behavior in our research, we drew on what is called the network epistemology framework. It was first developed by economists more than 20 years ago to study the social spread of beliefs in a community. Models of this kind have two parts: a problem and a network of individuals (or “agents”). The problem involves picking one of two choices. These could be “vaccinate” and “don’t vaccinate” your children. In the model, the agents have beliefs about which choice is better. Some believe vaccination is safe and effective, and others believe it causes autism. Agents’ beliefs shape their behavior—those who think vaccination is safe choose to perform vaccinations. Their behavior, in turn, shapes their beliefs. When agents vaccinate and see that nothing bad happens, they become more convinced vaccination is indeed safe.


The second part of the model is a network that represents social connections. Agents can learn not only from their own experiences of vaccinating but also from the experiences of their neighbors. Thus, an individual’s community is highly important in determining what beliefs they ultimately develop.

The network epistemology framework captures some essential features missing from contagion models: individuals intentionally gather data, share data and then experience consequences for bad beliefs. The findings teach us some important lessons about the social spread of knowledge. The first thing we learn is that working together is better than working alone because someone facing a problem like this is likely to prematurely settle on the worse theory. For instance, they might observe one child who turns out to have autism after vaccination and conclude that vaccines are not safe. In a community, there tends to be some diversity in what people believe. Some test one action; some test the other. This diversity means that usually enough evidence is gathered to form good beliefs.
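A rough sketch of how such a model might be set up is given here. It is an illustration under stated assumptions rather than the published framework: the `Agent` class, the use of simple success and failure counts as beliefs, and the constant `KNOWN_RATE` for the familiar option are all hypothetical choices made for the example.

```python
import random

KNOWN_RATE = 0.5  # assumed: success rate of the familiar option, known to everyone

class Agent:
    """Holds a belief about the uncertain option as success/failure counts."""

    def __init__(self, successes, failures):
        self.successes = successes
        self.failures = failures

    def expected_rate(self):
        return self.successes / (self.successes + self.failures)

    def tests_uncertain_option(self):
        # Beliefs shape behavior: only agents who favor the option try it.
        return self.expected_rate() > KNOWN_RATE

    def update(self, wins, trials):
        # Behavior shapes beliefs: observed outcomes are added to the counts.
        self.successes += wins
        self.failures += trials - wins

def run_round(agents, neighbors, true_rate, trials, rng):
    """One round: agents who favor the uncertain option test it, then every
    agent updates on their own results and on their neighbors' results."""
    results = {}
    for i, agent in enumerate(agents):
        if agent.tests_uncertain_option():
            results[i] = sum(rng.random() < true_rate for _ in range(trials))
    for i, agent in enumerate(agents):
        for j in [i] + list(neighbors[i]):
            if j in results:
                agent.update(results[j], trials)

# Hypothetical usage: an optimist and a skeptic who can see each other's data.
rng = random.Random(42)
pair = [Agent(3, 1), Agent(1, 3)]
for _ in range(20):
    run_round(pair, {0: [1], 1: [0]}, true_rate=0.6, trials=10, rng=rng)
print([round(a.expected_rate(), 2) for a in pair])
```

In this sketch a skeptical agent gathers no data of their own, so the only way they can change their mind is through evidence produced by a neighbor. That is one way to see why an isolated agent can get stuck on the worse option while a connected one need not.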

But even this group benefit does not guarantee that agents learn the truth. Real scientific evidence is probabilistic, of course. For example, some nonsmokers get lung cancer, and some smokers do not get lung cancer. This means that some studies of smokers will find no connection to cancer. Relatedly, although there is no actual statistical link between vaccines and autism, some vaccinated children will be autistic. Thus, some parents observe their children developing symptoms of autism after receiving vaccinations. Strings of misleading evidence of this kind can be enough to steer an entire community wrong.

In the most basic version of this model, social influence means that communities end up at consensus. They decide either that vaccinating is safe or that it is dangerous. But this does not fit what we see in the real world. In actual communities, we see polarization—entrenched disagreement about whether to vaccinate. We argue that the basic model is missing two crucial ingredients: social trust and conformism.

Social trust matters to belief when individuals treat some sources of evidence as more reliable than others. This is what we see when anti-vaxxers trust evidence shared by others in their community more than evidence produced by the Centers for Disease Control and Prevention or other medical research groups. This mistrust can stem from all sorts of things, including previous negative experiences with doctors or concerns that health care or governmental institutions do not care about their best interests. In some cases, this distrust may be justified, given that there is a long history of medical researchers and clinicians ignoring legitimate issues from patients, particularly women.

Yet the net result is that anti-vaxxers do not learn from the very people who are collecting the best evidence on the subject. In versions of the model where individuals do not trust evidence from those who hold very different beliefs, we find communities become polarized, and those with poor beliefs fail to learn better ones.
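One simple way to picture this mistrust is to let each agent discount evidence according to how far the source's belief lies from their own. The sketch below is an assumption-laden illustration, not the update rule used in our models: the linear discount, the `mistrust` parameter and both function names are hypothetical.

```python
def trust_weight(my_belief, their_belief, mistrust=2.0):
    """Weight given to someone's evidence: 1.0 for identical beliefs, falling
    linearly to 0.0 as the gap between beliefs grows (assumed linear form)."""
    return max(0.0, 1.0 - mistrust * abs(my_belief - their_belief))

def discounted_update(successes, failures, wins, trials, weight):
    """Add another agent's results to one's own counts, scaled by trust."""
    return successes + weight * wins, failures + weight * (trials - wins)

# A skeptic (belief 0.2) sees ten successes reported by someone at belief 0.9.
weight = trust_weight(0.2, 0.9)
print(weight)                                      # prints 0.0
print(discounted_update(1, 3, 10, 10, weight))     # prints (1.0, 3.0)
```

With a weight of zero, even a perfect run of favorable results leaves the skeptic's counts unchanged, which is how polarization can persist in the presence of good evidence.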

Conformism, meanwhile, is a preference to act in the same way as others in one’s community. The urge to conform is a profound part of the human psyche and one that can lead us to take actions we know to be harmful. When we add conformism to the model, what we see is the emergence of cliques of agents who hold false beliefs. The reason is that agents connected to the outside world do not pass along information that conflicts with their group’s beliefs, meaning that many members of the group never learn the truth.
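Conformism can be sketched as a decision rule that mixes what an agent believes with what their neighbors are doing. The scoring formula, the `conformity` weight and the action labels `"new"` and `"old"` below are illustrative assumptions, not the exact form used in the research.

```python
def choose_action(expected_rate, known_rate, neighbor_actions, conformity):
    """Pick "new" or "old" by blending belief-based payoff with the share of
    neighbors taking each action. conformity=0 means beliefs alone decide;
    conformity=1 means the agent simply copies the local majority."""
    if neighbor_actions:
        share_new = neighbor_actions.count("new") / len(neighbor_actions)
    else:
        share_new = 0.5  # no neighbors: no social pull either way
    score_new = (1 - conformity) * expected_rate + conformity * share_new
    score_old = (1 - conformity) * known_rate + conformity * (1 - share_new)
    return "new" if score_new > score_old else "old"

# An agent who believes the new option is better, surrounded by holdouts.
holdouts = ["old", "old", "old"]
print(choose_action(0.7, 0.5, holdouts, conformity=0.0))  # prints new
print(choose_action(0.7, 0.5, holdouts, conformity=0.5))  # prints old
```

An agent who conforms in this way stops testing the better option and therefore produces no evidence for neighbors to learn from, even though their private belief is correct.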

Conformity can help explain why vaccine skeptics tend to cluster in certain communities. Some private and charter schools in southern California have reported vaccination rates in the low double digits. And rates have been startlingly low among Somali immigrants in Minneapolis and Orthodox Jews in Brooklyn—two communities that have suffered from measles outbreaks.

Interventions for vaccine skepticism need to be sensitive to both social trust and conformity. Simply sharing new evidence with skeptics will probably not help because of trust issues. And convincing trusted community members to speak out for vaccination might be difficult because of conformism. The best approach is to find individuals who share enough in common with members of the relevant communities to establish trust. A rabbi, for instance, might be an effective vaccine ambassador in Brooklyn, whereas in southern California, you might need to get Gwyneth Paltrow involved.

Social trust and conformity can help explain why polarized beliefs can emerge in social networks. But at least in some cases, including the Somali community in Minnesota and Orthodox Jewish communities in New York, they are only part of the story. Both groups were the targets of sophisticated misinformation campaigns designed by anti-vaxxers.

Influence Operations

How we vote, what we buy and who we acclaim all depend on what we believe about the world. As a result, there are many wealthy, powerful groups and individuals who are interested in shaping public beliefs—including those about scientific matters of fact. There is a naive idea that when industry attempts to influence scientific belief, they do it by buying off corrupt scientists. Perhaps this happens sometimes. But a careful study of historical cases shows there are much more subtle—and arguably more effective—strategies that industry, nation states and other groups utilize. The first step in protecting ourselves from this kind of manipulation is to understand how these campaigns work.

A classic example comes from the tobacco industry, which developed new techniques in the 1950s to fight the growing consensus that smoking kills. During the 1950s and 1960s the Tobacco Institute published a bimonthly newsletter called “Tobacco and Health” that reported only scientific research suggesting tobacco was not harmful or research that emphasized uncertainty regarding the health effects of tobacco.

The pamphlets employ what we have called selective sharing. This approach involves taking real, independent scientific research and curating it by presenting only the evidence that favors a preferred position. Using variants on the models described earlier, we have argued that selective sharing can be shockingly effective at shaping what an audience of nonscientists comes to believe about scientific matters of fact. In other words, motivated actors can use seeds of truth to create an impression of uncertainty or even convince people of false claims.
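The effect of selective sharing can be illustrated with a toy calculation. The study results below are made-up numbers for the example, and the function names and the 0.5 cutoff are hypothetical. Every study in the list is treated as genuine, and the curator does nothing except choose which ones to pass along.

```python
def pooled_rate(studies):
    """Overall rate of the harmful outcome across (cases, sample_size) pairs."""
    total_cases = sum(cases for cases, _ in studies)
    total_size = sum(size for _, size in studies)
    return total_cases / total_size

def selectively_share(studies, cutoff):
    """Pass along only the studies whose observed rate falls below cutoff."""
    return [(cases, size) for cases, size in studies if cases / size < cutoff]

# Made-up results from five equally sized studies of the same question.
all_studies = [(7, 10), (6, 10), (4, 10), (8, 10), (3, 10)]
shared = selectively_share(all_studies, cutoff=0.5)

print(round(pooled_rate(all_studies), 2))  # prints 0.56
print(round(pooled_rate(shared), 2))       # prints 0.35
```

An audience that sees only the shared studies ends up with a much lower estimate of harm than the full record supports, even though no individual result was altered or invented.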

Selective sharing has been a key part of the anti-vaxxer playbook. Before the 2018 measles outbreak in New York, an organization calling itself Parents Educating and Advocating for Children’s Health (PEACH) produced and distributed a 40-page pamphlet entitled “The Vaccine Safety Handbook.” The information shared—when accurate—was highly selective, focusing on a handful of scientific studies suggesting risks associated with vaccines, with minimal consideration of the many studies that find vaccines to be safe.

The PEACH handbook was especially effective because it combined selective sharing with rhetorical strategies. It built trust with Orthodox Jews by projecting membership in their community (although it was published pseudonymously, at least some authors were members) and emphasizing concerns likely to resonate with them. It cherry-picked facts about vaccines intended to repulse its particular audience; for instance, it noted that some vaccines contain gelatin derived from pigs. Wittingly or not, the pamphlet was designed in a way that exploited social trust and conformism—the very mechanisms crucial to the creation of human knowledge.

Worse, propagandists are constantly developing ever more sophisticated methods for manipulating public belief. Over the past several years we have seen purveyors of disinformation roll out new ways of creating the impression—especially through social media conduits such as Twitter bots, paid trolls, and the hacking or copying of friends’ accounts—that certain false beliefs are widely held, including by your friends and others with whom you identify. Even the PEACH creators may have encountered this kind of synthetic discourse about vaccines. According to a 2018 article in the American Journal of Public Health, such disinformation was distributed by accounts linked to Russian influence operations seeking to amplify American discord and weaponize a public health issue. This strategy works to change minds not through rational arguments or evidence but simply by manipulating the social spread of knowledge and belief.

The sophistication of misinformation efforts (and the highly targeted disinformation campaigns that amplify them) raises a troubling problem for democracy. Returning to the measles example, children in many states can be exempted from mandatory vaccinations on the grounds of “personal belief.” This became a flash point in California in 2015 following a measles outbreak traced to unvaccinated children visiting Disneyland. Then governor Jerry Brown signed a new law, SB277, removing the exemption.

Immediately vaccine skeptics filed paperwork to put a referendum on the next state ballot to overturn the law. Had they succeeded in getting 365,880 signatures (they made it to only 233,758), the question of whether parents should be able to opt out of mandatory vaccination on the grounds of personal belief would have gone to a direct vote—the results of which would have been susceptible to precisely the kinds of disinformation campaigns that have caused vaccination rates in many communities to plummet.

Luckily, the effort failed. But the fact that hundreds of thousands of Californians supported a direct vote about a question with serious bearing on public health, where the facts are clear but widely misconstrued by certain activist groups, should give serious pause. There is a reason that we care about having policies that best reflect available evidence and are responsive to reliable new information. How do we protect public well-being when so many citizens are misled about matters of fact? Just as individuals acting on misinformation are unlikely to bring about the outcomes they desire, societies that adopt policies based on false belief are unlikely to get the results they want and expect.

The way to decide a question of scientific fact—are vaccines safe and effective?—is not to ask a community of nonexperts to vote on it, especially when they are subject to misinformation campaigns. What we need is a system that not only respects the processes and institutions of sound science as the best way we have of learning the truth about the world but also respects core democratic values that would preclude a single group, such as scientists, dictating policy.

We do not have a proposal for a system of government that can perfectly balance these competing concerns. But we think the key is to better separate two essentially different issues: What are the facts, and what should we do in light of them? Democratic ideals dictate that both require public oversight, transparency and accountability. But it is only the second—how we should make decisions given the facts—that should be up for a vote.