Might we humans be the only species on this planet to be truly conscious? Might lobsters and lions, beetles and bats be unconscious automata, responding to their worlds with no hint of conscious experience? Aristotle thought so, claiming that humans have rational souls but that other animals have only the instincts needed to survive. In medieval Christianity the “great chain of being” placed humans on a level above soulless animals and below only God and the angels. And in the 17th century, French philosopher René Descartes argued that other animals have only reflex behaviors. Yet the more biology we learn, the more obvious it is that we share not only anatomy, physiology and genetics with other animals but also systems of vision, hearing, memory and emotional expression. Could it really be that we alone have an extra special something: this marvelous inner world of subjective experience?

The question is hard because although your own consciousness may seem the most obvious thing in the world, it is perhaps the hardest to study. We do not even have a clear definition beyond appealing to a famous question asked by philosopher Thomas Nagel back in 1974: What is it like to be a bat? Nagel chose bats because they live such very different lives from our own. We may try to imagine what it is like to sleep upside down or to navigate the world using sonar, but does it feel like anything at all? The crux here is this: If there is nothing it is like to be a bat, we can say it is not conscious. If there is something (anything) it is like for the bat, it is conscious. So is there?

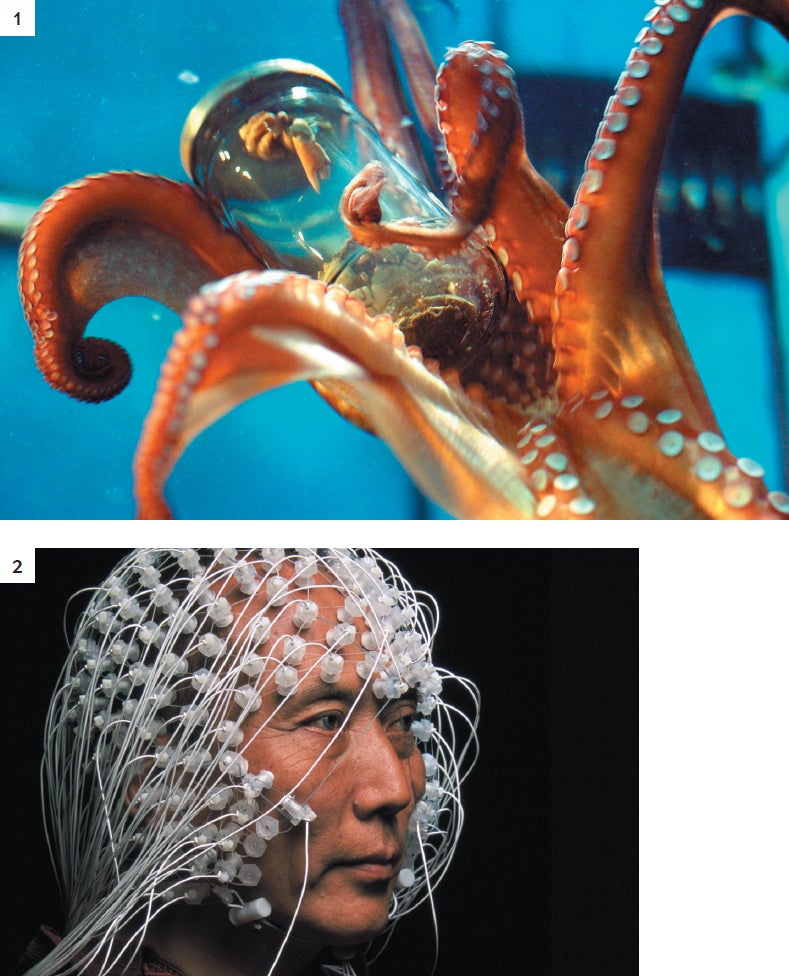

We share a lot with bats: we, too, have ears and can imagine our arms as wings. But try to imagine being an octopus. You have eight curly, grippy, sensitive arms for getting around and catching prey but no skeleton, and so you can squeeze yourself through tiny spaces. Only a third of your neurons are in a central brain; the rest are in the nerve cords that run along each of your eight arms. Consider: Is it like something to be a whole octopus, to be its central brain or to be a single octopus arm? The science of consciousness provides no easy way of finding out.

Even worse is the “hard problem” of consciousness: How does subjective experience arise from objective brain activity? How can physical neurons, with all their chemical and electrical communications, create the feeling of pain, the glorious red of the sunset or the taste of fine claret? This is a problem of dualism: How can mind arise from matter? Indeed, does it?

The answer to this question divides consciousness researchers down the middle. On one side is the “B Team,” as philosopher Daniel C. Dennett described them in a heated debate. Members of this group agonize about the hard problem and believe in the possibility of the philosopher's “zombie,” an imagined creature that is indistinguishable from you or me but has no consciousness. Believing in zombies means that other animals might conceivably be seeing, hearing, eating and mating “all in the dark” with no subjective experience at all. If that is so, consciousness must be a special additional capacity that we might have evolved either with or without and, many would say, are lucky to have.

On the other side is the A Team: scholars who reject the possibility of zombies and think the hard problem is, to quote philosopher Patricia Churchland, a “hornswoggle problem” that obfuscates the issue. Either consciousness just is the activity of bodies and brains, or it inevitably comes along with everything we so obviously share with other animals. In the A Team's view, there is no point in asking when or why “consciousness itself” evolved or what its function is because “consciousness itself” does not exist.

Suffering

Why does it matter? One reason is suffering. When I accidentally stamped on my cat's tail and she screeched and shot out of the room, I was sure I had hurt her. Yet behavior can be misleading. We could easily place pressure sensors in the tail of a robotic cat to activate a screech when stepped on—and we would not think it suffered pain. Many people become vegetarians because of the way farm animals are treated, but are those poor cows and pigs pining for the great outdoors? Are battery hens suffering horribly in their tiny cages? Behavioral experiments show that although hens enjoy scratching about in litter and will choose a cage with litter if access is easy, they will not bother to push aside a heavy curtain to get to it. So do they not much care? Lobsters make a terrible screaming noise when boiled alive, but could this just be air being forced out of their shells?

When lobsters or crabs are injured, are taken out of water or have a claw twisted off, they release stress hormones similar to cortisol and corticosterone. This response provides a physiological reason to believe they suffer. An even more telling demonstration is that when injured prawns limp and rub their wounds, this behavior can be reduced by giving them the same painkillers as would reduce our own pain.

The same is true of fish. When experimenters injected the lips of rainbow trout with acetic acid, the fish rocked from side to side and rubbed their lips on the sides of their tank and on gravel, but giving them morphine reduced these reactions. When zebrafish were given a choice between a tank with gravel and plants and a barren one, they chose the interesting tank. But if they were injected with acid and the barren tank contained a painkiller, they swam to the barren tank instead. Fish pain may be simpler or in other ways different from ours, but these experiments suggest they do feel pain.


Some people remain unconvinced. Australian biologist Brian Key argues that fish may respond as though they are in pain, but this observation does not prove they are consciously feeling anything. Noxious stimuli, he asserted in the open-access journal Animal Sentience, “don't feel like anything to a fish.” Human consciousness, he argues, relies on signal amplification and global integration, and fish lack the neural architecture that makes these connections possible. In effect, Key rejects all the behavioral and physiological evidence, relying on anatomy alone to uphold the uniqueness of humans.

A World of Different Brains

If such studies cannot resolve the issue, perhaps comparing brains might help. Could humans be uniquely conscious because of their large brains? British pharmacologist Susan Greenfield proposes that consciousness increases with brain size across the animal kingdom. But if she is right, then African elephants and grizzly bears are more conscious than you are, and Great Danes and Dalmatians are more conscious than Pekinese and Pomeranians, which makes no sense.

More relevant than size may be aspects of brain organization and function that scientists think are indicators of consciousness. Almost all mammals and most other animals—including many fish and reptiles and some insects—alternate between waking and sleeping or at least have strong circadian rhythms of activity and responsiveness. Specific brain areas, such as the lower brain stem in mammals, control these states. In the sense of being awake, therefore, most animals are conscious. Still, this is not the same as asking whether they have conscious content: whether there is something it is like to be an awake slug or a lively lizard.

Many scientists, including Francis Crick and, more recently, British neuroscientist Anil Seth, have argued that human consciousness involves widespread, relatively fast, low-amplitude interactions between the thalamus, a sensory way station in the core of the brain, and the cortex, the gray matter at the brain's surface. These “thalamocortical loops,” they claim, help to integrate information across the brain and thereby underlie consciousness. If this is correct, finding these features in other species should indicate consciousness. Seth concludes that because other mammals share these structures, they are conscious. Yet many other animals do not: lobsters and prawns, for example, have no cortex or thalamocortical loops. Perhaps we need more specific theories of consciousness to find the critical features.

Among the most popular is global workspace theory (GWT), originally proposed by American neuroscientist Bernard Baars. The idea is that human brains are structured around a workspace, something like working memory. Any mental content that makes it into the workspace, or onto the brightly lit “stage” in the theater of the mind, is then broadcast to the rest of the unconscious brain. This global broadcast is what makes individuals conscious.

This theory implies that animals with no brain, such as starfish, sea urchins and jellyfish, could not be conscious at all. Nor could those with brains that lack the right global workspace architecture, including fish, octopuses and many other animals. Yet, as we have already explored, a body of behavioral evidence implies that they are conscious.

Integrated information theory (IIT), originally proposed by neuroscientist Giulio Tononi, is a mathematically based theory that defines a quantity called Φ (pronounced “phi”), a measure of the extent to which information in a system is both differentiated into parts and unified into a whole. Various ways of measuring Φ lead to the conclusion that large and complex brains like ours have high Φ, deriving from amplification and integration of neural activity widely across the brain. Simpler systems have lower Φ, with differences also arising from the specific organization found in different species. Unlike global workspace theory, IIT implies that consciousness might exist in simple forms in the lowliest creatures, as well as in appropriately organized machines with high Φ.

Both these theories are currently considered contenders for a true theory of consciousness and ought to help us answer our question. But when it comes to animal consciousness, their answers clearly conflict.

The Evolving Mind

Thus, our behavioral, physiological and anatomical studies all give mutually contradictory answers, as do the two most popular theories of consciousness. Might it help to explore how, why and when consciousness evolved?

Here again we meet that gulf between the two groups of researchers. Those in the B Team assume that because we are obviously conscious, consciousness must have a function such as directing behavior or saving us from predators. Yet their guesses as to when consciousness arose range from billions of years ago right up to historical times.

For example, psychiatrist and neurologist Todd Feinberg and biologist Jon Mallatt proffer, without giving compelling evidence, an opaque theory of consciousness involving “nested and nonnested” neural architectures and specific types of mental images. These, they claim, are found in animals that lived 560 million to 520 million years ago. Baars, the author of global workspace theory, ties the emergence of consciousness to that of the mammalian brain around 200 million years ago. British archaeologist Steven Mithen points to the cultural explosion that started 60,000 years ago when, he contends, separate skills came together in a previously divided brain. Psychologist Julian Jaynes agrees that a previously divided brain became unified but claims this happened much later. Finding no evidence of words for consciousness in the Greek epic the Iliad, he concludes that the Greeks were not conscious of their own thoughts in the same way that we are, instead attributing their inner voices to the gods. Therefore, Jaynes argues, until 3,000 years ago people had no subjective experiences.

Are any of these ideas correct? They are all mistaken, claim those in the A Team, because consciousness has no independent function or origin: it is not that kind of thing. Team members include “eliminative materialists” such as Patricia and Paul Churchland, who maintain that consciousness just is the firing of neurons and that one day we will come to accept this just as we accept that light just is electromagnetic radiation. IIT also denies a separate function for consciousness because any system with sufficiently high Φ must inevitably be conscious. Neither of these theories makes human consciousness unique, but one final idea might.

This is the well-known, though much misunderstood, claim that consciousness is an illusion. This approach does not deny the existence of subjective experience but claims that neither consciousness nor the self is what it seems to be. Illusionist theories include psychologist Nicholas Humphrey's idea of a “magical mystery show” being staged inside our heads. Out of our ongoing experiences, he posits, the brain concocts a story that serves an evolutionary purpose by giving us a reason for living. Then there is neuroscientist Michael Graziano's attention schema theory, in which the brain builds a simplified model of how and to what it is paying attention. This idea, when linked to a model of self, allows the brain, or indeed any machine, to describe itself as having conscious experiences.

By far the best-known illusionist hypothesis, however, is Dennett's “multiple drafts theory”: brains are massively parallel systems with no central theater in which “I” sit viewing and controlling the world. Instead multiple drafts of perceptions and thoughts are continually processed, and none is either conscious or unconscious until the system is probed and elicits a response. Only then do we say the thought or action was conscious; thus, consciousness is an attribution we make after the fact. He relates this to the theory of memes. (A meme is information copied from person to person, including words, stories, technologies, fashions and customs.) Because humans are capable of widespread generalized imitation, we alone can copy, vary and select among memes, giving rise to language and culture. “Human consciousness is itself a huge complex of memes,” Dennett wrote in Consciousness Explained, and the self is a “benign user illusion.”

This illusory self, this complex of memes, is what I call the “selfplex.” It is the illusion that we are a powerful self that has consciousness and free will, and this illusion may not be so benign. Paradoxically, it may be our unique capacity for language, autobiographical memory and the false sense of being a continuing self that serves to increase our suffering. Whereas other species may feel pain, they cannot make it worse by crying, “How long will this pain last? Will it get worse? Why me? Why now?” In this sense, our suffering may be unique. For illusionists such as myself, the answer to our question is simple and obvious. We humans are unique because we alone are clever enough to be deluded into believing that there is a conscious “I.”