Three years ago, a team of psychologists challenged 180 students with a spatial puzzle. The students could ask for a hint if they got stuck. But before the test, the researchers introduced some subtle interventions to see whether these would have any effect.

The psychologists split the volunteers into three groups, each of which had to unscramble some words before doing the puzzle. One group was the control, another sat next to a pile of play money and the third was shown scrambled sentences that contained words relating to money.

The study, published this June, was a careful repeat of a widely cited 2006 experiment. The original had found that merely giving students subtle reminders of money made them work harder: in this case, they spent longer on the puzzle before asking for help. That work was one among scores of laboratory studies which argued that tiny subconscious cues can have drastic effects on our behaviour.

Known by the loosely defined terms ‘social priming’ or ‘behavioural priming’, these studies include reports that people primed with ‘money’ are more selfish; that those primed with words related to professors do better on quizzes; and even that people exposed to something that literally smells fishy are more likely to be suspicious of others.

The most recent replication effort, however, led by psychologist Doug Rohrer at the University of South Florida in Tampa, found that students primed with ‘money’ behave no differently on the puzzle task from the controls. It is one of dozens of failures to verify earlier social-priming findings. Many researchers say they now see social priming not so much as a way to sway people’s unconscious behaviour, but as an object lesson in how shaky statistical methods fooled scientists into publishing irreproducible results.

This is not the only area of research to be dented by science’s ‘replication crisis’. Failed replication attempts have cast doubt on findings in areas from cancer biology to economics. But so many findings in social priming have been disputed that some say the field is close to being entirely discredited. “I don’t know a replicable finding. It’s not that there isn’t one, but I can’t name it,” says Brian Nosek, a psychologist at the University of Virginia in Charlottesville, who has led big replication studies. “I’ve gone from full believer to full sceptic,” adds Michael Inzlicht, a psychologist at the University of Toronto, Canada, and an associate editor at the journal Psychological Science.

Some psychologists say the pendulum has swung too far against social priming. Among these are veterans of the field who insist that their findings remain valid. Others accept that many of the earlier studies are in doubt, but say there’s still value in social priming’s central idea. It is worth studying whether it’s possible to affect people’s behaviour using subtle, low-cost interventions—as long as the more-outlandish and unsupported claims can be weeded out, says Esther Papies, a psychologist at the University of Glasgow, UK.

Equipped with more-rigorous statistical methods, researchers are finding that social-priming effects do exist, but seem to vary between people and are smaller than first thought, Papies says. She and others think that social priming might survive as a set of more modest, yet more rigorous, findings. “I’m quite optimistic about the field,” she says.

Rise and fall

The roots of the priming phenomenon go back to the 1970s, when psychologists showed that people get faster at recognizing and processing words if they are primed by related ones. For instance, after seeing the word ‘doctor’, they recognized ‘nurse’ faster than they did unrelated words. This ‘semantic’ priming is now well established.

But from the late 1970s, and especially in the 1980s and 1990s, researchers argued that priming could affect attitudes and behaviours. A 1979 study found that priming individuals with words related to ‘hostility’ made them more likely to judge the actions of a character in a story as hostile. And in 1996, John Bargh, a psychologist at New York University in New York City, found that people primed with words conventionally related to age in the United States (‘bingo’, ‘wrinkle’, ‘Florida’) walked more slowly than the control group as they left the lab, as if they were older.

Dozens more studies followed, finding that priming could affect how people performed at general-knowledge quizzes, how generous they were or how hard they worked at tasks. These behavioural examples became known as social priming, although the term is disputed because there is nothing obviously social about many of them. Others prefer ‘behavioural priming’ or ‘automatic behaviour priming’.

In his 2011 best-seller Thinking, Fast and Slow, Nobel-prizewinning psychologist Daniel Kahneman mentioned several of the best-known priming studies. “Disbelief is not an option,” he wrote of them. “The results are not made up, nor are they statistical flukes. You have no choice but to accept that the major conclusions of these studies are true.”

But concerns were starting to surface. That same year, Daryl Bem, a social psychologist at Cornell University in Ithaca, New York, published a study suggesting that students could predict the future. Bem’s analysis relied on statistical techniques that psychologists regularly used. “I remember reading it and thinking ‘Fuck. If we can do this, we have a problem,’” says Hans IJzerman, a social psychologist at the University of Grenoble Alps in Grenoble, France.

Also that year, three other researchers published a deliberately absurd finding: that those who listened to the Beatles song ‘When I’m Sixty-Four’ literally became younger than a control group that listened to a different song. They achieved this result by analysing their data in many different ways, getting a statistically significant result in one of them by simple fluke, and then not reporting the other attempts. Such practices, they said, were common in psychology and allowed researchers to find whatever they wanted, given some noisy data and small sample sizes.
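To see why quietly trying several analyses is so dangerous, here is a minimal Python sketch. It is not the Beatles study's own analysis. It assumes, for simplicity, that each extra analysis is an independent test of an effect that does not exist, and that scores are normally distributed with a known spread, so a simple z-test gives exact p-values. The function names are hypothetical.

```python
import math
import random

ALPHA = 0.05  # conventional significance threshold


def familywise_error(k: int, alpha: float = ALPHA) -> float:
    """Chance that at least one of k independent null tests comes out 'significant'."""
    return 1 - (1 - alpha) ** k


def z_test_p(a: list[float], b: list[float], sigma: float = 1.0) -> float:
    """Two-sided p-value for a difference in means when the true SD is known."""
    n_a, n_b = len(a), len(b)
    diff = sum(a) / n_a - sum(b) / n_b
    se = sigma * math.sqrt(1 / n_a + 1 / n_b)
    z = diff / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_forking_paths(k: int, n: int = 20, trials: int = 2000, seed: int = 1) -> float:
    """Fraction of null experiments where at least one of k analyses hits p < ALPHA.

    Both groups always come from the same distribution, so every 'finding'
    is a fluke. Each analysis is modelled as an independent test (an assumption).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        for _ in range(k):
            treated = [rng.gauss(0, 1) for _ in range(n)]
            control = [rng.gauss(0, 1) for _ in range(n)]
            if z_test_p(treated, control) < ALPHA:
                hits += 1
                break  # a researcher who stops at the first 'success'
    return hits / trials
```

With one pre-specified test, the false-positive rate stays near 5%. With ten analyses to choose from, `familywise_error(10)` gives about 0.40, and `simulate_forking_paths(10)` lands close to that. Real analysis choices are usually correlated with one another, so the exact inflation differs, but the direction is the same.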

The papers had an explosive impact. Replication efforts that cast doubt on key findings started to appear, including a 2012 report that repeated Bargh’s ageing study and found no effect of priming unless the people observing the experiment were told what to expect. It did not help that, at around the same time, it emerged that a leading social psychologist in the Netherlands, Diederik Stapel, had been faking data for years.

In 2012, Kahneman wrote an open letter to Bargh and other “students of social priming”, warning that “a train wreck” was approaching. Despite being a “general believer” in the research, Kahneman worried that fraud such as Stapel’s, replication failures and a tendency for negative results not to get published had created “a storm of doubt”.

Seven years later, the storm has uprooted many of social priming’s flagship findings. Eric-Jan Wagenmakers, a psychologist at the University of Amsterdam, says that when he read the relevant part of Kahneman’s book, “I was like, ‘not one of these studies will replicate.’ And so far, nothing has.”

Psychologist Eugene Caruso reported in 2013 that reminding people of the concept of money made them more likely to endorse free-market capitalism. Now at the University of California, Los Angeles, Caruso says that having tried bigger and more systematic tests of the effects, “there does not seem to be robust support for them”. Ap Dijksterhuis, a researcher at Radboud University in Nijmegen, the Netherlands, says that his paper suggesting that students primed with the word ‘professor’ do better at quizzes “did not pass the test of time”.

Kahneman told Nature: “I am not up to date on the most recent developments, so should not comment.”

Researchers had been whispering about not being able to repeat big findings years before the priming bubble began to burst, says Nosek. Once it did, and in lessons shared with science’s wider replication crisis, it became clear that many of the problematic findings were probably statistical noise rather than the result of fraud: fluke results garnered from studies on too-small groups of people. It seems that many researchers were not alert to how easy it is to find significant-looking but spurious results in noisy data. This is especially so if researchers ‘HARK’ (Hypothesize After Results are Known), that is, change their hypotheses after looking at their data. The fact that journals tend not to publish null results didn’t help, because it meant that the findings that got through were disproportionately the surprising ones.

There is also evidence that subconscious experimenter effects have been a problem, Papies says: one study found that experimenters who were aware of the priming effect they were looking for were much more likely to find it, suggesting that they were somehow influencing the results without realizing it.

Since then, there have been widespread moves throughout psychology to improve research methods. These include pre-registering study methods before looking at data, which prevents HARKing, and working with larger groups of volunteers. Nosek, for instance, has led the Many Labs project, in which undergraduates at dozens of labs try to replicate the same psychology studies, giving sample sizes of thousands. On average, about half of the papers that Many Labs looks at can be replicated successfully. Other collaborative efforts include the Psychological Science Accelerator, a network of labs that work together to replicate influential studies.

The new social priming

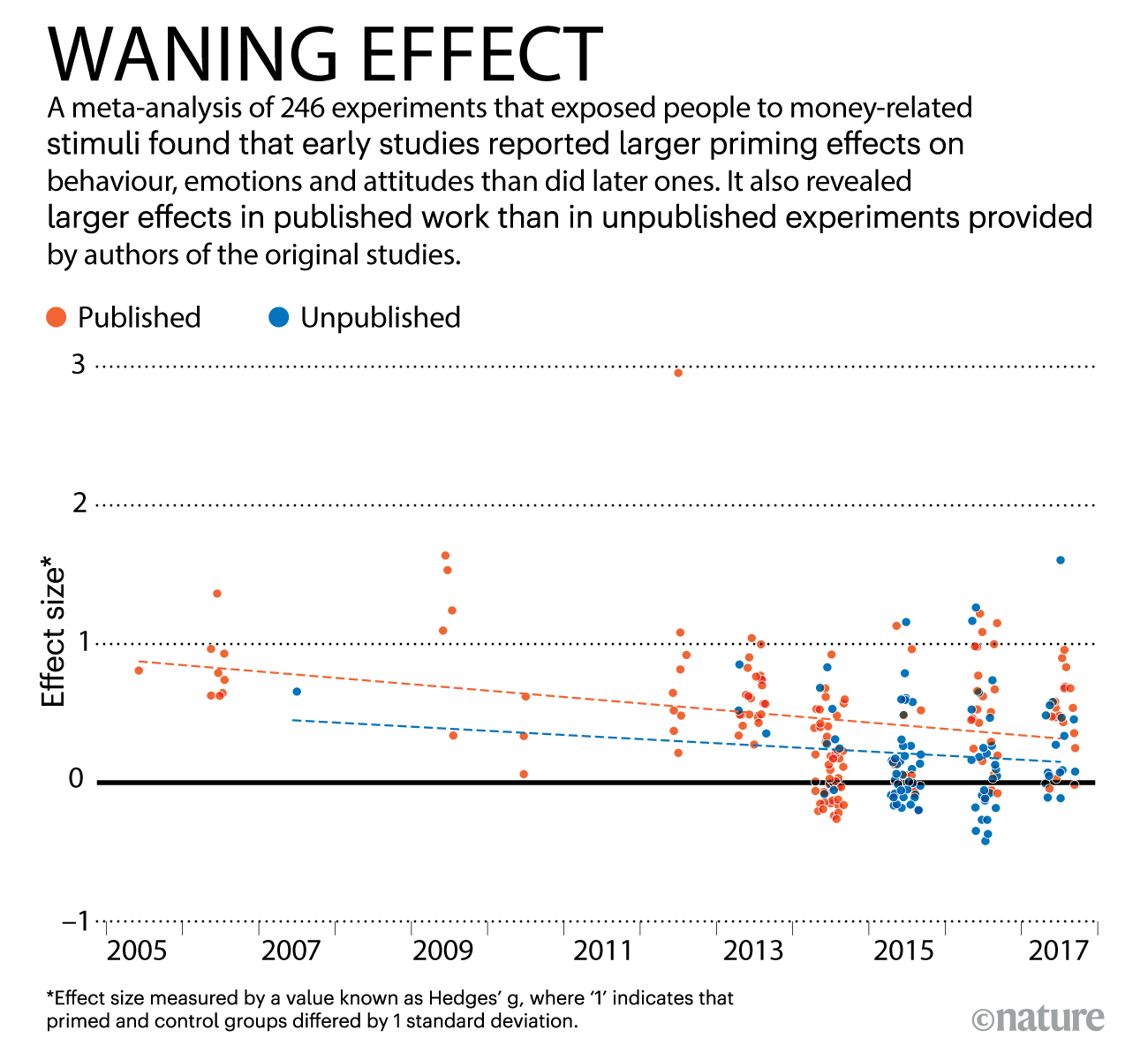

Today, much of the work being done in social priming involves replications of earlier work, or meta-analyses of multiple papers to try to tease out what still holds true. A meta-analysis of hundreds of studies on many kinds of money priming, reported this April, found little evidence for the large effects the early studies claimed. It also found larger effects in published studies than in unpublished experiments that had been shared with the authors of the meta-analysis (see ‘Waning effect’).
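A gap between published and unpublished experiments is what one would expect if journals mostly print statistically significant results. The minimal Python sketch below assumes a hypothetical small true effect (0.2 standard deviations), 20 people per group, and a journal that publishes only results with p < 0.05 in the predicted direction. None of these numbers come from the money-priming meta-analysis.

```python
import math
import random
import statistics


def run_study(rng: random.Random, true_effect: float, n: int) -> tuple[float, float]:
    """One two-group study; returns (estimated effect, two-sided p-value).

    Scores are normal with SD 1, so the difference in group means estimates
    the standardized effect and a z-test gives exact p-values.
    """
    treated = [rng.gauss(true_effect, 1) for _ in range(n)]
    control = [rng.gauss(0, 1) for _ in range(n)]
    estimate = statistics.fmean(treated) - statistics.fmean(control)
    z = estimate / math.sqrt(2 / n)
    return estimate, math.erfc(abs(z) / math.sqrt(2))


def published_vs_all(true_effect: float = 0.2, n: int = 20, studies: int = 5000,
                     alpha: float = 0.05, seed: int = 7) -> tuple[float, float, float]:
    """Mean effect across all studies, mean effect among 'published' ones,
    and the fraction of studies that get 'published' (hypothetical filter)."""
    rng = random.Random(seed)
    results = [run_study(rng, true_effect, n) for _ in range(studies)]
    all_effects = [est for est, _ in results]
    # Journal filter: significant AND in the direction the authors predicted.
    published = [est for est, p in results if p < alpha and est > 0]
    return statistics.fmean(all_effects), statistics.fmean(published), len(published) / studies
```

Under these assumptions, the full set of studies averages close to the true 0.2, while the 'published' subset averages several times larger: with small groups, only lucky overestimates clear the significance bar.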

Original work hasn’t dried up entirely, says Papies, although the focus is changing. Much of the high-profile social-priming work of the past was designed to find huge, universal effects, she says. Instead, her group’s studies focus on finding smaller effects in the subset of people who already care about the thing being primed. She has found that people who want to become thinner are more likely to make healthy food choices if they are primed, say, with words on a menu such as ‘diet’, ‘thin’ and ‘trim figure’. But it works only in people for whom a healthy diet is a central goal; it doesn’t make everyone avoid fattening foods.

This matches the findings of a meta-analysis from 2015, led by psychologist Dolores Albarracín at the University of Illinois at Urbana–Champaign. It looked at 352 priming studies that involved presenting words to people, and it found evidence of real, if small, effects when the prime was related to a goal that the participants cared about. That analysis, however, deliberately looked only at experiments in which the priming words were directly related to the claimed effect, such as rudeness-related words leading to ruder behaviour or attitudes. It avoided looking at studies with primes that had what it termed ‘metaphorical’ meaning—including the ageing-related words that Bargh said led to slower walking, or the money-related priming work.

Research into priming has declined, however, and what now counts as priming research does not always resemble the startling claims of the 1990s and 2000s. “There’s a lot less than there was five or ten years ago,” says Antonia Hamilton, a neuroscientist at University College London, who still works on priming. Partly, she says, that’s because of the replication problems: “We do less since it all blew up. It’s harder to make people believe it and there are other topics that are easier to study.” It might also be simply that the topic has become less fashionable, she says.

Hamilton’s own work involves, among other things, putting people in functional magnetic resonance imaging (fMRI) scanners to see how priming affects brain activity. In one 2015 study, she used a scrambled-sentence task to prime ‘prosocial’ ideas (such as ‘helping’) and ‘antisocial’ ones (such as ‘annoying’), seeing whether it made participants quicker to mimic other people’s actions, and whether there were detectable differences in brain scans.

Using fMRI is only practical with small numbers of volunteers, so she looks at how the same people respond when they have been primed and when they haven’t: a ‘within-subjects’ design, in contrast to the ‘between-subjects’ design of priming studies that use a control group. The design means that researchers don’t have to worry about pre-existing differences between groups, Hamilton says. Her research has found priming effects: people primed with prosocial concepts behave in more prosocial ways, and fMRI scans did show differences in activity in brain areas such as the medial prefrontal cortex, which is involved in regulating social behaviours. But, she says, the effects are more modest than those the classic priming studies found.
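Why a within-subjects design copes better with few volunteers can be shown with a little arithmetic. The Python sketch below is not Hamilton's analysis; it assumes a simple additive model in which each score is a person's stable baseline plus trial-to-trial noise, that priming leaves no carry-over into the unprimed measurement, and it uses hypothetical spreads for the baseline and the noise.

```python
import math


def between_subjects_se(n_per_group: int, person_sd: float, noise_sd: float) -> float:
    """Standard error of the primed-minus-control difference with two separate groups.

    Each score mixes stable person-to-person differences with trial noise,
    so both sources of variance end up in the comparison.
    """
    per_score_var = person_sd ** 2 + noise_sd ** 2
    return math.sqrt(2 * per_score_var / n_per_group)


def within_subjects_se(n_people: int, noise_sd: float) -> float:
    """Standard error when every person is measured both primed and unprimed.

    Taking each person's own difference cancels their stable baseline,
    leaving only the noise from the two measurements.
    """
    return math.sqrt(2 * noise_sd ** 2 / n_people)


# Hypothetical values: baseline differences twice as large as trial noise.
print(round(between_subjects_se(20, person_sd=1.0, noise_sd=0.5), 4))  # 40 people in total
print(round(within_subjects_se(20, noise_sd=0.5), 4))  # 20 people, each measured twice
```

In this hypothetical, the within-subjects estimate is more than twice as precise (0.1581 against 0.3536) while using half as many people. The catch is the no-carry-over assumption: being primed once may colour later measurements, which within-subjects studies generally address by counterbalancing the order of conditions.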

Some researchers say that however efforts to test older results pan out, the concept of social or behavioural priming still has merit. “I still have no doubts whatsoever that in real life, behaviour priming works, despite the fact that in the old days, we didn’t study it properly relative to current standards,” says Dijksterhuis.

Bargh says that despite many researchers now discounting them, important early advances do exist—such as his own 2008 study, which reported that holding warm coffee made people behave more warmly towards others. Direct replications have failed to support the result, but Bargh says that a link between physical warmth and social warmth has been demonstrated in other work, including neuroimaging studies. “People say we should just throw out all the work before 2010, the work of people my age and older,” says Bargh, “and I don’t see how that’s justified.” He and Norbert Schwarz, a psychologist at the University of Southern California in Los Angeles, say that there have been replications of their earlier social-priming results—although critics counter that these were not direct replications but ‘conceptual’ ones, in which researchers test a concept using related experimental set-ups.

Bargh says that social-priming results are still widely believed and used by non-academics, such as political campaigners and business marketers, and that even some sceptics have ended up finding effects. Gary Latham, for instance, an organizational psychologist at the University of Toronto in Canada, says: “I strongly disliked Bargh’s findings and wanted to show it doesn’t work.” Despite this, he says, he has for ten years consistently found that priming phone marketers with words related to ideas of success and winning increases the amount of money they make. But Leif Nelson, a psychologist at the University of California, Berkeley, emphasizes that whether or not social-priming ideas are subsequently confirmed, the classic studies in the field were not statistically powerful enough to detect the things they claimed to find.
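Nelson's point about statistical power can be made concrete with a rough calculation. The Python sketch below uses the normal approximation for a two-group comparison; the effect size of 0.2 standard deviations and the group size of 20 are illustrative assumptions, not figures from any study mentioned here.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, SD 1)


def power_two_group(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-group comparison.

    effect_size is the standardized difference in means (Cohen's d).
    Uses the normal approximation rather than an exact t-test.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n_per_group / 2)
    # Probability that the test statistic lands beyond either critical value.
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


def n_for_power(effect_size: float, power: float = 0.8, alpha: float = 0.05) -> int:
    """People per group needed to reach the target power (same approximation,
    ignoring the negligible far tail)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_crit + z_power) / effect_size) ** 2)


print(round(power_two_group(0.2, 20), 3))  # a small study chasing a small effect
print(n_for_power(0.2))                    # group size for 80% power
```

Under those assumptions, a study with 20 people per group has roughly a 10% chance of detecting a real effect of that size, while close to 400 people per group are needed for 80% power. That gap is one reason collaborative efforts such as Many Labs pool thousands of participants.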

Bargh sees positives and negatives in how psychology research has changed. “If pre-registration stops people from HARKing, then I guess it’s good,” he says, “but it always struck me as an insult. ‘We don’t trust you to be honest’; it feels like we’re being treated like criminals, wearing ankle bracelets.”

Others disagree. The move towards open, reproducible science, according to most psychologists, has been a huge success. Social priming as a field might survive, but if it does not, then at least its high-profile problems have been crucial in forcing psychology to clean up its act. “I have to say I am pleasantly surprised by how far the field has come in eight years,” says Wagenmakers. “It’s been a complete change in how people do things and interpret things.”

This article is reproduced with permission and was first published on December 11 2019.